Should surgical robots be brought under the law when they fail in action?

In recent years, professionals have developed medical robots and chatbots to monitor frail elderly people and help with simple tasks. Some people with mental illness benefit from AI-driven treatment applications, drug-ordering systems help physicians avoid harmful interactions among multiple prescriptions, and surgical assistance equipment makes operations more precise and safer. These are only a few examples of recent advances in medical technology.

The rapid adoption of AI in medicine also raises a crucial liability question for the medical field: who should be held liable when these devices fail? Getting this accountability question right will be vital not only for patients' rights but also for creating appropriate incentives in the political economy of technology development and in the medical labor market.

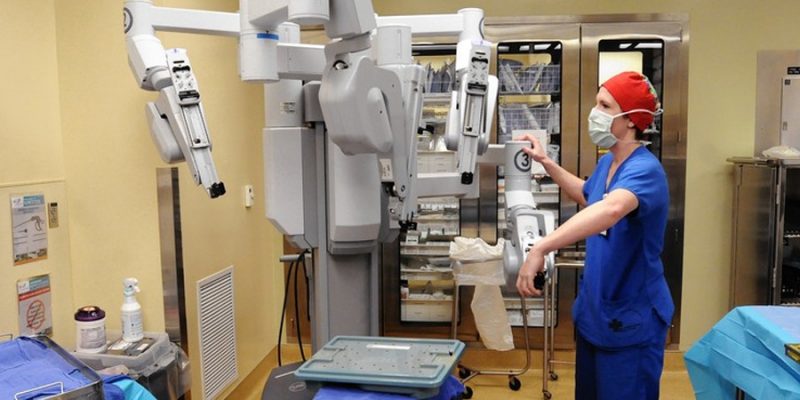

Malfunctions of Surgical Robots

Individual surgical facilities have reported numerous software, mechanical, and electrical malfunctions that occurred before or during robotic surgery. These reports found device malfunction rates ranging from 0.4 to 8.0 percent and malfunction-related conversion rates (switching to a non-robotic procedure) ranging from 0.1 to 2.7 percent, with averages of 3.0 percent (95 percent confidence interval (CI), 1.9–4.2) and 0.9 percent (95 percent CI, 0.4–1.4), respectively. Five primary kinds of device and instrument malfunctions affected patients, either by causing injuries and complications or by interrupting surgery and lengthening procedure times. The malfunction categories and the actions taken by surgical teams were not mutually exclusive; in many cases, multiple defects or actions were recorded for a single incident.

Prevailing Legal Framework

The failure of a robotic device during surgery raises complex questions about the legal duties and obligations of the many parties involved. The same legal principles that govern professional responsibility in medicine also apply to robotic surgery. Because sophisticated medical equipment is a vital element of the patient's treatment, assessing culpability is difficult, which adds to the complexity of litigation. If an adverse outcome occurs, culpability may lie with the surgeon performing the operation, the manufacturer of the robotic device, or both. Consequently, robotic surgery lawsuits frequently combine medical malpractice claims with product liability claims, even though the two are legally distinct. In malpractice cases, the standard of liability is negligence. This means the plaintiff must show that the doctor failed to meet the accepted standard of care, as defined by common professional practice, and that this failure directly caused the patient serious harm.

Need for Tougher Law?

Under a strict liability standard, the manufacturer, distributor, or retailer of a product may be held liable for an adverse incident even if it was not negligent. In other words, the makers of even a well-designed and properly deployed system may be held liable for its errors, and strict product liability can result in a judgment against the manufacturer without any finding of wrongdoing. This may seem an overly harsh standard. However, the doctrine encourages continuous technological improvement; if the law instead sets excessively high requirements for recovery, these technologies may remain error-prone and reliant on obsolete or non-representative datasets.

A rigorous liability standard would also deter premature automation in fields where human skill is still required. Medicine has long maintained a norm of skilled professional supervision and monitoring when new technologies are deployed. When automation substitutes for that review and an avoidable adverse event follows, the patient should be compensated. State legislatures may cap damages to avoid unduly deterring innovation. Even so, compensation is still owed, because the injury might have been averted had a “person in the loop” been present.

As courts develop these evolving standards of care, they can expect AI owners to try to escape accountability. Policymakers are struggling to keep pace with rapid technological change, and legislators worry about stifling the industry's growth and innovation. At the same time, public demand for legislative intervention and safeguards around essential technologies is growing. Such requirements need not stifle economic or technical progress. Indeed, if buyers are not confident that someone will be held responsible when an AI system or robot fails tragically, these innovations may never gain wide acceptance. Developing adequate accountability standards along the lines outlined above could reassure patients while also improving the quality of AI and robotics in healthcare.